Facebook v. Science

Social media have helped us cocoon ourselves in comfortable ignorance of “the other side.” So goes the prevailing notion of the last few years, ever since Facebook became king.

A team of Facebook researchers published an article Thursday that set out to measure how much the site contributes to political echo chambers, or filter bubbles. Their report, published in the journal Science, concluded that Facebook’s black-box newsfeed algorithm weeded out some disagreeable content from readers’ feeds, but less than the readers’ own behavior did.

A flurry of criticism followed from other social scientists. One of them, the University of Michigan’s Christian Sandvig, called it Facebook’s “it’s not our fault” study.

Sample frame

Perhaps the most important limitation of the findings is the relatively small, and unusual, subset of users examined. Although the total number was huge (10 million), these were users who voluntarily label their political leanings on their profiles and also log on regularly. They make up only about 4 percent of the Facebook population, and they differ from typical users in both obvious and subtle ways. Critics have pointed out that this crucial detail is relegated to an appendix.

Despite this sampling problem, the authors framed their findings by saying they “*conclusively* establish [them] on average in the context of Facebook […]” [emphasis added].

As the University of North Carolina’s Zeynep Tufekci and the University of Maryland’s Nathan Jurgenson pointed out, that’s simply inaccurate. The context the Facebook researchers examined was highly skewed and cannot be generalized to Facebook as a whole.

While the ideal random sample is not always available, and convenience samples can tell us much about subpopulations of interest, the way this sample was selected confounds the results. Those who are willing to include their political preferences in their Facebook bio are likely to deal with ideologically challenging information in fundamentally different ways than everyone else does.

Still, for all this criticism, we now know more about that type of user than we did yesterday.

Algorithm vs. personal choice (what they really found, and didn’t)

Another troubling aspect of the study is the way the main finding is presented. The authors write that Facebook’s newsfeed algorithm reduces exposure to cross-cutting material by 8 percent (1 in 13 such hard-news stories) for self-identified liberals and by 5 percent (1 in 20) for conservatives. The researchers also report that these individuals’ own choices further reduce their exposure to diverse content, by 6 percent among liberals and 17 percent among conservatives.

Setting these two effects, algorithm and personal choice, against each other is what led Sandvig to call this Facebook’s “it’s not our fault” study.

Tufekci and Jurgenson say the authors failed to mention that the two effects are additive and cumulative. That individuals make reading choices that feed their personal filter bubbles is hardly disputed. What the study confirmed is that Facebook’s algorithm adds to that, on top of the psychological baseline. Yet that was not the emphasis of the authors’ comparison, nor of many of the headlines covering the study.
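A rough back-of-the-envelope illustration of what “cumulative” means here: if one assumes the two reductions compound in sequence (the algorithm first filters what appears in the feed, and readers then choose among what remains), which is a simplifying assumption for illustration rather than the study’s own model, the combined reduction in cross-cutting exposure for each group would be approximately:

$$
\begin{aligned}
\text{liberals:}\quad & 1 - (1 - 0.08)(1 - 0.06) = 1 - 0.92 \times 0.94 \approx 0.135 \\
\text{conservatives:}\quad & 1 - (1 - 0.05)(1 - 0.17) = 1 - 0.95 \times 0.83 \approx 0.211
\end{aligned}
$$

Under that assumption, the algorithm’s effect stacks on top of personal choice rather than competing with it, for a combined reduction of roughly 13.5 percent among liberals and 21 percent among conservatives.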

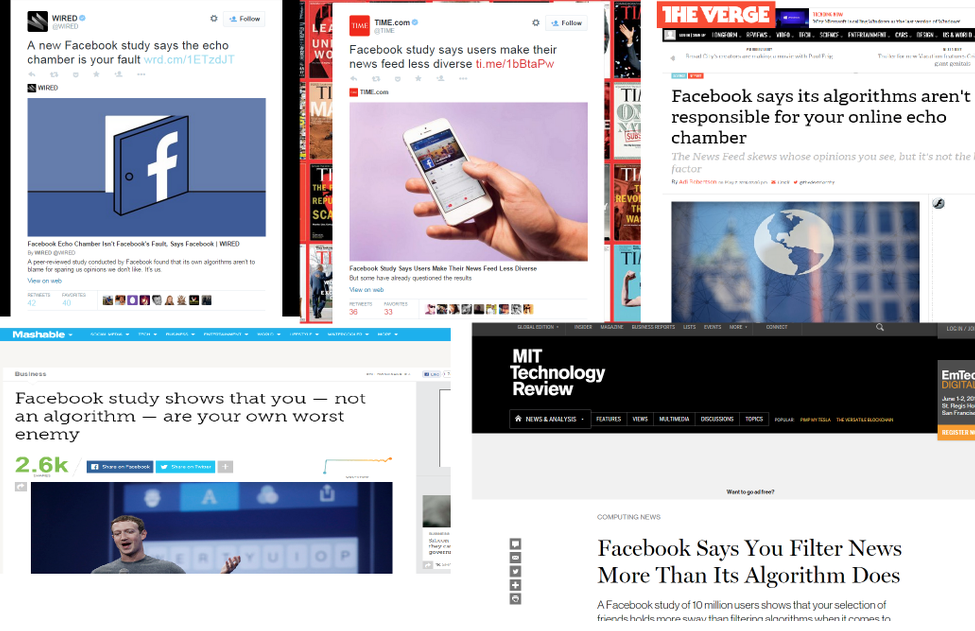

For instance:

Tufekci and Jurgenson also point out that the authors apparently botched the statement of this main finding by claiming “that on average in the context of Facebook individual choices more than algorithms limit exposure to attitude-challenging content.” The findings they report are actually mixed: self-identified liberals’ exposure was more strongly suppressed by the algorithm than by personal choice (8 percent v. 6 percent), while for conservatives the reverse was true (5 percent v. 17 percent).

Science is iterative

Amid all the blowback in the academic world, especially over the conclusion’s inflated claims, some called for a more dispassionate appraisal. Dartmouth’s Brendan Nyhan, who regularly contributes to the New York Times’ Upshot, asked social scientists to “show we can hold two (somewhat) opposed ideas in our heads at the same [time] on the [Facebook] study.” In other words, the study is important, if flawed.

“Science is iterative!” Nyhan tweeted. “Let’s encourage [Facebook] to help us learn more, not attack them every time they publish research. Risk is they just stop.”

But there are rejoinders to that call as well. As University of Maryland law professor James Grimmelmann pointed out, “‘Conclusively’ doesn’t leave a lot of room for iteration.”

Nyhan’s point that Facebook could stop publishing its findings given enough criticism also highlights another problem: because the study was conducted with Facebook’s proprietary data, it cannot be replicated, and replicability is a key ingredient of scientific research.

Journals and journalists

Given the overstated (or misstated) findings, many have called out Science, the journal that published the article. Not only is Science peer-reviewed, but it is also, along with Nature, one of the foremost academic journals in the world.

While many of yesterday’s news articles noted the controversy around the publication, others repeated the debated conclusion verbatim. Jurgenson had harsh words for the journal: “Reporters are simply repeating Facebook’s poor work because it was published in Science. [Th]e fault here centrally lies with Science, [which] has decided to trade its own credibility for attention. [K]inda undermines why they exist.”

In my Summer 2014 GJR article “Should journalists take responsibility for reporting bad science?” I wrote about who bears responsibility in such cases. Although social media habits are not as high-stakes as health and medicine, journals, public relations departments and scientists themselves must be more accountable for the information they pass on to journalists and, ultimately, to readers.

Although “post-publication review” is here to stay, the initial gatekeepers should always be the first line of defense against bad science, especially when the journal in question carries the mantle of the entire scientific enterprise.